Overengineering Log Analysis With Functions

My Struggle With Logs In Text Format

Engineers read lots of logs. If you're a software engineer, there's a reasonable chance you're doing that on a daily or weekly basis, desperately going through .log files. I won't go into the details of why it can be boring, or tiring. If I dig into that part you may fall asleep mid-journey, just like I do when reading logs myself.

But whenever I'm barely awake trying to diagnose an issue reading text logs, I really like a "tell a story" approach. So usually I do lots of greps, finds, searches, regexes, trying to capture just the information I think is relevant. And as that process goes on (I guess it's kind of iterative) I try to make sense of all of that using a timeline of events. Sometimes you may want to highlight certain pieces of information, you may want to correlate pieces of information, you may want to join pieces of information. In some cases I use a separate note editor, just to sketch out the story I'm trying to tell.

Note that this is a very specific step of the whole log analysis process. I'm not talking about alerts, or getting different log sources together. I'm talking about debugging, focusing on the cause of a problem or error. That's when you're trying to understand different flows, making notes, and coming up with hypotheses. We're talking about what we do at the end of the log analysis pipeline. And more often than not, the tools used for this job are text editors or scripts.

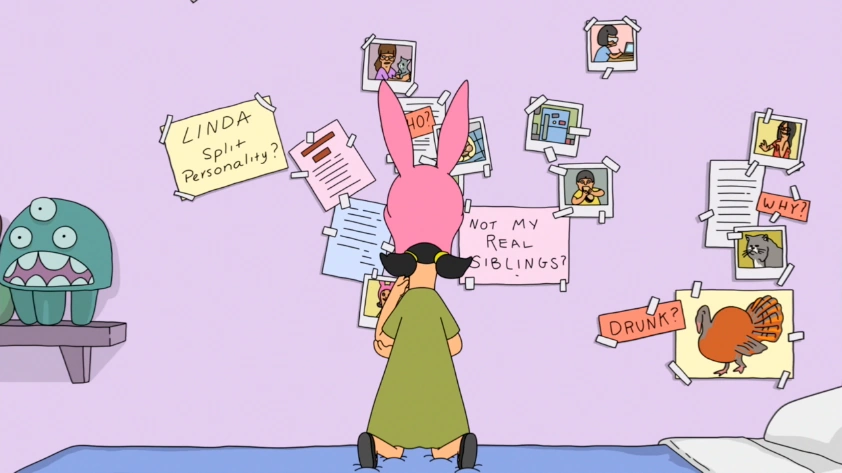

This story board phase of the process is when I think some of the tools I use fall short. When I'm trying to tell my story, when I'm looking at the full picture I'm building, trying to make sense of things, I don't think I get enough help from the tools I have available.

Standard Text Editors

These, of course, are meant to be general purpose editors. Some of them perform poorly when searching or dealing with huge buffers. You do have good alternatives like Vim with some extensions or Notepad++ on Windows. Still, even when you have advanced filters, regex and multiple views available, they're focused on editing, not on annotations or creating associations between log lines.

Scripts and Structured Logging

Scripts are the usual first "go-to" option once people notice their product is getting too complex. It happens mainly when you have small teams. Unfortunately, they're hard to maintain because logs are extremely dynamic: log lines are always changing. And they're usually a single-pass thing, not interactive enough for a story telling tool.

Structured logging ends up working, most of the time, just as an aid to scripts (and sometimes as an aid to more robust tools too, for querying useful information). Still, it just makes search easier, and when search doesn't help with correlating information, it doesn't help with the story telling process either.

Distributed Tracing

Distributed tracing doesn't belong here, I believe. And the reason this is here is that distributed tracing popped up while I was discussing these ideas with a friend. They questioned the role of distributed tracing systems in such scenarios. Distributed tracing is indeed a huge help in terms of sorting and joining log information together, mainly when you have complex distributed systems. Though, in the end, it won't tell the story alone, and it won't give you any extra organizational aids besides what it already does. It's one useful step, but it happens before the analysis part.

And please reach out to me if you're familiar with any other alternatives that make sense for log analysis in that interactive way I mentioned. The few I've seen were paid and/or seemed incomplete. Maybe this means it's not a real problem?

Given all that, of course I couldn't resist. As a software engineer, you start thinking about what could be improved or added to these tools. And that naive glimpse of "I could create my own tool, of course" took over. After all, I'm always looking for an excuse to write things in Rust as I'm learning the language at the moment.

But let's park that idea for a minute. For now, let's digress and talk about an interesting functional approach used by a graphics tool called Graphite.

Graphite and its Functional Approach

- Weren't we talking about logs? What the heck?

Bear with me, please, and listen to my personal anecdote if you have some spare time to waste.

I was listening to the RustShip podcast a while ago and that was when I heard about Graphite. Graphite is a raster and vector editor for digital art-work. Lots of people use similar tools, and they're something quite common. But there's a neat thing about it. The authors say it's a "state-of-the-art" environment, and the reason is how they approach raster and vector graphics. Instead of using the idea of layers of raster graphics keeping the state of your work, they use a graph (or actually a digraph) of operations (functions) that act on different sources to produce a final product. This basically means, instead of holding the state of your art-work in pixels, it holds that information in chained operations. Note that the final product can then be rendered in any resolution or size, or with certain parameters tweaked for different types of action nodes.

%%{init: {'theme':'neutral'}}%%

flowchart LR

Image((Image))-->Mask((Mask))

Shape((Shape))-->Stencil((Stencil))

Stencil-->Mask

Mask-->Transform((Transform))

Transform-->Blur((Blur))

Blur-->O1((Output1))

Transform-->O2((Output2))

This is basically a functional approach (as stated by the authors). You have a graph where nodes are functions (as in mappings) that work on their input, keep the original source of information untouched (it's immutable), and spill out some result just to be consumed by the next functions in this same way. This is an abstraction well known by people that use functional languages or understand mathematical functions. This gives us some quite interesting properties for graphic work:

- Parameterizable. It's so easy to create templates. Once you have your graph ready, just changing some of the node configurations or inputs can create just the variations you need in the output. The authors give an interesting example of a trading card game in the podcast episode, where given a processing graph, all you need is the textual inputs for the information that goes onto the cards. And notice that experimenting with different parameters is quite easy, it's just a matter of tweaking knobs. Finally, as we're not talking about raster graphics, you can generate the final product images in any size you want, and no information is lost. The size of the output image is just another parameter.

- Replicable. The art-work can be replicated on any canvas. If you had the wrong canvas at first, no bother, you just change it. Exporting the graph is like passing on all the information from your work.

- Cacheable. It's basically easy to check if an input of a node changed or not, and in certain scenarios you can have them cached. Without cycles, this is even easier.

- Concurrent. It's easy to identify the pieces of image processing that can happen in parallel. As nodes won't share information between them, that also helps.

- Composable. It's easy to compose different setups for your graph. Certain branches can easily be reused for different cases.
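To make a couple of these properties concrete, here is a minimal sketch of a graph of pure functions with cached evaluation. It is not how Graphite is implemented; `Node`, `evaluate`, and the toy `load`, `scale` and `smooth` functions are all names I'm making up for illustration, with tuples of numbers standing in for pixel data. It mirrors the diagram above, where one transform feeds two outputs.

```python
from dataclasses import dataclass, replace
from typing import Any, Callable, Dict, Tuple


@dataclass(frozen=True)
class Node:
    """A pure function, the upstream nodes feeding it, and its parameters."""
    name: str
    fn: Callable[..., Any]
    inputs: Tuple["Node", ...] = ()
    params: Tuple[Tuple[str, Any], ...] = ()


def evaluate(node: Node, cache: Dict[Node, Any]) -> Any:
    """Evaluate a node, reusing results already computed for identical nodes."""
    if node not in cache:
        args = [evaluate(upstream, cache) for upstream in node.inputs]
        cache[node] = node.fn(*args, **dict(node.params))
    return cache[node]


calls = []  # records which functions actually ran


def load():
    calls.append("load")
    return (1, 2, 3, 4)  # stand-in for pixel data


def scale(pixels, factor):
    calls.append("scale")
    return tuple(p * factor for p in pixels)


def smooth(pixels):
    calls.append("smooth")
    return tuple((a + b) / 2 for a, b in zip(pixels, pixels[1:]))


image = Node("image", load)
transform = Node("transform", scale, (image,), (("factor", 2),))
blur = Node("blur", smooth, (transform,))

cache: Dict[Node, Any] = {}
output1 = evaluate(blur, cache)       # runs load, scale, smooth
output2 = evaluate(transform, cache)  # served from the cache

# Tweaking a knob yields a new node; only the affected work is redone.
tripled = replace(transform, params=(("factor", 3),))
output3 = evaluate(tripled, cache)    # reruns scale, reuses the cached load
```

Because nodes are immutable and hashable, the node itself can serve as the cache key. That only works here because the functions are pure: same node, same inputs, same result.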

Functional Approach for Text Log Analysis

We'll now get back to logging, and you may already know where I'm going with this. What if we overengineered a log tool using those concepts we see in Graphite? Lots of those properties that arise from the functional approach are desirable also for text log analysis. Chains of filters and mappings are basically one of the first things you do when looking at logs. And this approach may not only help with filtering, or those previous steps that happen before the story telling process. Such properties may also be useful during the story telling process, while you're building up your story board.

When we say "functional approach", that can be taken in a very abstract way. I'll apologise in advance if I'm missing formalities here, but let's recap the properties we captured above from the Graphite project. Let's unravel these properties, and then check how they apply to log analysis, both to the filtering steps and to the story telling step.

The Graphite properties we listed previously generally derive from fairly basic functional traits:

1. Functions don't share data with other functions.

2. Functions don't mutate data.

3. Functions don't hold state.

4. Functions have well-defined inputs and outputs.

And this is the way they correlate to the list:

| Property | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| Parameterizable | 🗸 | | | |
| Replicable | 🗸 | 🗸 | | |
| Cacheable | 🗸 | 🗸 | 🗸 | |
| Concurrent | 🗸 | 🗸 | | |
| Composable | 🗸 | | | |

These are all properties that are desired in text log analysis. You want to be able to select important information, usually through filtering, so you need parameterization. You want to apply the same diagnosis process throughout different text log files, so you want your methods to be replicable. You'll be dealing with huge amounts of text, so making it cacheable might be a good idea. You'll have different flows for different types of logs, and you'll want different information when debugging different problems, so make it composable.

Instantiating those in the real world, we can try to describe what a graph of functions would look like in our use case. We could have different types of nodes. Ordinary nodes would pretty much be functions that map a pair $(T_i, M_i)$ to another pair $(T_o, M_o)$, where $T_i$ and $M_i$ are respectively the text input and the metadata input, and $T_o$ and $M_o$ are the text and metadata outputs. Text input can be something like log lines, and metadata can be anything that may help the poor engineer to tell their story.

%%{init: {'theme':'neutral'}}%%

flowchart LR

A((...)) -->|"(Ti,Mi)"| B((Standard\nNode))

B-->|"(To,Mo)"|C((...))
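A standard node like the one in the diagram above could be sketched as a plain function from one (text, metadata) pair to another. This is only an illustration under my own assumptions: text is a tuple of log lines, metadata is a read-only mapping, and `grep`, `annotate` and `chain` are hypothetical helpers, not part of any existing tool.

```python
import re
from typing import Callable, Mapping, Tuple

Text = Tuple[str, ...]             # immutable sequence of log lines
Metadata = Mapping[str, object]    # never mutated, only copied and extended
Stream = Tuple[Text, Metadata]
Node = Callable[[Stream], Stream]


def grep(pattern: str) -> Node:
    """Build a node that keeps only the lines matching `pattern`."""
    regex = re.compile(pattern)

    def node(stream: Stream) -> Stream:
        text, meta = stream
        kept = tuple(line for line in text if regex.search(line))
        filters = (*meta.get("filters", ()), pattern)
        return kept, {**meta, "filters": filters}

    return node


def annotate(key: str, note: str) -> Node:
    """Build a node that passes text through and attaches a note."""
    def node(stream: Stream) -> Stream:
        text, meta = stream
        return text, {**meta, key: note}

    return node


def chain(*nodes: Node) -> Node:
    """Compose nodes left to right into a single node."""
    def node(stream: Stream) -> Stream:
        for step in nodes:
            stream = step(stream)
        return stream

    return node


log = (
    "10:00:01 INFO  service started",
    "10:00:05 ERROR db connection refused",
    "10:00:06 INFO  retrying",
    "10:00:09 ERROR db connection refused",
)

pipeline = chain(grep("ERROR"), annotate("hypothesis", "database was down"))
text_out, meta_out = pipeline((log, {}))
```

Each node returns new tuples and new dictionaries instead of modifying its input, so `log` is still intact afterwards and the same pipeline can be pointed at any other log.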

Source nodes can map a time range to text and metadata, feeding the standard nodes described above. We can also have sink nodes that receive the text and metadata and present them to the user. Having multiple sink nodes can be very useful to keep information organized and available at the same time, and sinks can be selective about which metadata is rendered.
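As a rough sketch of those two node types, assume every log line starts with a fixed-width `HH:MM:SS` timestamp and that lines are already in time order. The names `make_source` and `make_sink` are hypothetical, and a real tool would need proper timestamp parsing.

```python
from bisect import bisect_left, bisect_right
from typing import Callable, Mapping, Sequence, Tuple

Stream = Tuple[Tuple[str, ...], Mapping[str, object]]


def make_source(lines: Sequence[str], name: str) -> Callable[[str, str], Stream]:
    """Build a source node that maps a time range to (text, metadata)."""
    frozen = tuple(lines)
    stamps = [line[:8] for line in frozen]  # assumes a leading HH:MM:SS stamp

    def source(start: str, end: str) -> Stream:
        # Binary search works because the stamps are assumed to be sorted.
        lo = bisect_left(stamps, start)
        hi = bisect_right(stamps, end)
        return frozen[lo:hi], {"source": name, "range": (start, end)}

    return source


def make_sink(title: str, show: Sequence[str]) -> Callable[[Stream], str]:
    """Build a sink node that renders the text plus selected metadata keys."""
    def sink(stream: Stream) -> str:
        text, meta = stream
        header = [f"{key}: {meta[key]}" for key in show if key in meta]
        return "\n".join([f"== {title} ==", *header, *text])

    return sink


lines = [
    "10:00:01 INFO  service started",
    "10:00:05 ERROR db connection refused",
    "10:00:06 INFO  retrying",
    "10:00:09 ERROR db connection refused",
]

source = make_source(lines, "app.log")
window = source("10:00:05", "10:00:06")

overview = make_sink("overview", show=["source"])
rendered = overview(window)
```

Here the sink only shows the `source` key and ignores `range`, which is the kind of selectiveness about metadata mentioned above.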

And to help with composing, splitter and merger nodes could... well, split or merge streams of text and metadata on the same input/output basis as the other nodes, the only difference being that they take or produce multiple text/metadata pairs.

%%{init: {'theme':'neutral'}}%%

flowchart LR

A((Source)) --> H((...))-->|"(Ti,Mi)"|B((Splitter))

B-->|"(To,Mo)"|C((Sink1))

B-->|"(To',Mo')"|D((Sink2))

E((...))

F((...))

G((Merge))

E-->|"(Ti,Mi)"|G

F-->|"(Ti',Mi')"|G

G-->|"(To,Mo)"|I((...))
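The splitter and merger from the diagram could look something like the sketch below. Again, this is just one possible shape: `splitter` and `merger` are hypothetical names, the merger relies on lines starting with a sortable timestamp, and letting right-hand metadata win on key conflicts is an arbitrary choice of mine.

```python
from heapq import merge as merge_sorted
from typing import Callable, Mapping, Tuple

Stream = Tuple[Tuple[str, ...], Mapping[str, object]]


def splitter(predicate: Callable[[str], bool]) -> Callable[[Stream], Tuple[Stream, Stream]]:
    """Build a node that splits one stream into (matching, remaining)."""
    def node(stream: Stream) -> Tuple[Stream, Stream]:
        text, meta = stream
        matched = tuple(line for line in text if predicate(line))
        rest = tuple(line for line in text if not predicate(line))
        return (matched, {**meta, "branch": "matched"}), (rest, {**meta, "branch": "rest"})

    return node


def merger(left: Stream, right: Stream) -> Stream:
    """Merge two time-ordered streams back into a single ordered stream."""
    left_text, left_meta = left
    right_text, right_meta = right
    # Lines are compared as strings, so the leading timestamp decides the order.
    text = tuple(merge_sorted(left_text, right_text))
    meta = {**left_meta, **right_meta, "branch": "merged"}
    return text, meta


log = (
    "10:00:01 INFO  service started",
    "10:00:05 ERROR db connection refused",
    "10:00:06 INFO  retrying",
    "10:00:09 ERROR db connection refused",
)

split_errors = splitter(lambda line: " ERROR " in line)
errors, others = split_errors((log, {"source": "app.log"}))

merged_text, merged_meta = merger(errors, others)
```

Splitting and then merging gives back the original lines in order, which is a handy sanity check for this pair of nodes.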

Helping The Story Telling Process

Engineers are also pretty good at wasting time finding problems for their solutions. Even though this sounds more like a longer way to achieve the perfect interactive log analysis tool, we may get some relevant traits from this model that might help with the story telling bit.

- Log files are big. An interactive log analyzer needs to meet some baselines for responsiveness. Luckily, log files are easily indexable in memory, since assuming they're ordered is generally fair given their timeline structure. That, combined with caching and multiprocessing, should help meet these constraints.

- Having multiple filters working at the same time is tempting. Multiple views are easily available with this pattern and should also be fairly performant if done correctly. The user can have one view looking at the bigger picture, another one looking into a more detailed life cycle analysis separately. And they could also be merged and split if necessary.

- Persisting these graph structures makes the filtering and annotations easy to recover, and they would only need the log file content when rendered. That can also be used as a way to obtain templates for story boards one would use for particular problems.
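The persistence idea in the last point can be sketched with a graph stored as plain JSON and rebuilt on demand. Everything here is an assumption made for illustration: the `REGISTRY` of node kinds, the `story` layout with `input` and `note` fields, and the reserved `source` name. To keep it short, this sketch only carries text through the nodes and keeps annotations in the graph description itself.

```python
import json
import re

# Hypothetical registry mapping a node kind to a factory of pure functions.
REGISTRY = {
    "grep": lambda pattern: lambda lines: tuple(
        line for line in lines if re.search(pattern, line)
    ),
    "head": lambda count: lambda lines: lines[:count],
}

# The story board: nodes, their parameters, their wiring and their notes.
story = {
    "errors": {"kind": "grep", "params": {"pattern": "ERROR"},
               "input": "source", "note": "suspect a database outage"},
    "first_error": {"kind": "head", "params": {"count": 1},
                    "input": "errors", "note": "where it all begins"},
}


def render(spec, name, lines):
    """Evaluate node `name` of `spec` against the given log lines."""
    if name == "source":
        return tuple(lines)
    node = spec[name]
    upstream = render(spec, node["input"], lines)
    return REGISTRY[node["kind"]](**node["params"])(upstream)


saved = json.dumps(story)      # persisted without any log content
restored = json.loads(saved)   # later, or on someone else's machine

monday = ["09:00:00 INFO up", "09:00:03 ERROR db timeout", "09:00:04 ERROR db timeout"]
tuesday = ["14:10:00 ERROR disk full", "14:10:02 INFO cleanup"]

first_monday = render(restored, "first_error", monday)
first_tuesday = render(restored, "first_error", tuesday)
```

The same restored graph is applied to two different logs, which is the template use case: the graph holds the filtering and the notes, and the log content is only needed at render time.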

All of this would go to waste without a responsive and decent UI, as we've been talking a lot about "interactive" anyway. I'm very tempted to try this model as a pet project, though. And I already have the most important thing for an open source project: the name. It's going to be called storytellers. And you may find the repo on my GitHub profile (probably, at this point, already miserably forgotten alongside lots of other unfinished personal projects).